Cloud Rendering vs Edge Processing: When Users Complain About Lag – Which Scales Better for Digital-Twin Platforms?

Taher Pardawala June 23, 2025

Choosing between cloud rendering and edge processing for digital-twin platforms comes down to balancing cost, latency, and scalability. Here’s what you need to know:

- Cloud Rendering: Great for scalability with minimal upfront costs. However, it comes with higher latency and ongoing expenses like data transfer and compute fees.

- Edge Processing: Offers ultra-low latency and better data privacy by processing locally. But it requires higher initial investment and more complex infrastructure management.

- Hybrid Models: Combine the strengths of both, balancing responsiveness and scalability by dynamically distributing tasks between cloud and edge.

Quick Comparison

| Factor | Cloud Rendering | Edge Processing |
|---|---|---|
| Upfront Costs | Low | High |
| Ongoing Costs | High | Lower |
| Latency | Higher (remote delays) | Lower (local processing) |
| Scalability | Excellent | Limited |
| Data Privacy | Moderate | High |
| Infrastructure | Easier to manage (centralized) | Complex (distributed) |

Key Takeaway

If your platform needs to handle real-time interactions with minimal delays, edge processing is the better choice. For scalability and lower initial costs, cloud rendering works well. A hybrid approach can deliver the best of both worlds, especially for platforms with varying task demands.

Now, let’s dive deeper into how each method works, their benefits, and their drawbacks.


Understanding Cloud Rendering

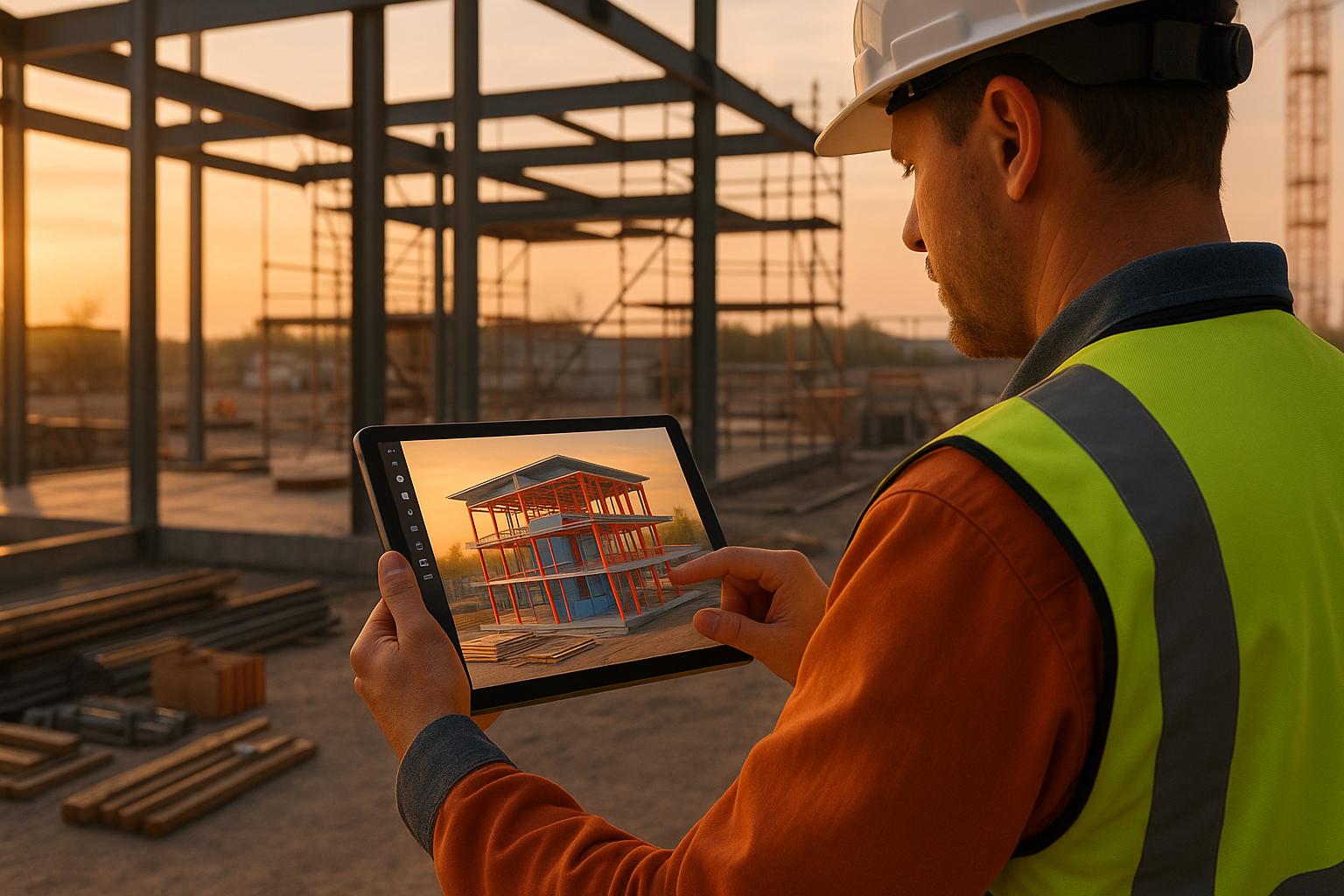

Cloud rendering moves the heavy lifting of graphics processing from your local device to powerful remote servers, which then stream the finished visuals back to your screen.

What is Cloud Rendering?

At its core, cloud rendering uses centralized GPUs to handle intensive graphics processing. This allows platforms like digital twins to manage complex 3D models, simulations, and data visualizations. Here’s how it works: IoT devices send sensor data to the platform, which processes it using AI and machine learning algorithms in the cloud. The platform then renders the digital twin and streams it to the user’s device [2].

For example, INVISTA partnered with AWS to create digital twins for its manufacturing operations. By using cloud processing, it gained a detailed digital overview of its systems [2]. Because rendering work can be split across many cloud servers running in parallel, large rendering jobs can also finish significantly faster.

Understanding this process helps lay the foundation for exploring both the advantages and challenges of cloud rendering.

Benefits of Cloud Rendering

Cloud rendering brings several advantages that make it a compelling choice for handling resource-intensive tasks.

One of the biggest perks is access to enterprise-grade GPUs. These high-performance graphics cards, housed in data centers, can tackle sophisticated digital-twin visualizations that would overwhelm standard laptops or tablets. For most organizations, deploying such powerful hardware across all devices would be prohibitively expensive.

Another benefit is automatic resource scaling. Cloud platforms adjust resources based on real-time demand, which helps cut costs during periods of low usage.
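Providers implement autoscaling differently, but many use a target-tracking rule. The Python sketch below follows the formula documented for Kubernetes' Horizontal Pod Autoscaler, desired = ceil(current × observed / target); the function name, percentage inputs, and replica limits are illustrative assumptions rather than any cloud provider's API.

```python
def desired_replicas(current: int, utilization_pct: int, target_pct: int,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Target-tracking scaling rule (hypothetical helper, HPA-style formula).

    desired = ceil(current * observed / target), clamped to [min_replicas, max_replicas].
    Integer percentages avoid floating-point rounding surprises in the ceiling.
    """
    if current < 1 or target_pct < 1:
        raise ValueError("current replicas and target utilization must be positive")
    desired = -(-(current * utilization_pct) // target_pct)  # integer ceiling division
    return max(min_replicas, min(max_replicas, desired))

# Peak hours: 4 GPU render servers at 90% utilization against a 60% target.
print(desired_replicas(4, 90, 60))  # -> 6 (scale out)
# Overnight: 6 servers at 15% utilization.
print(desired_replicas(6, 15, 60))  # -> 2 (scale in, which is where the savings come from)
```

The clamp matters in practice: the upper bound caps runaway spend during a traffic spike, and the lower bound keeps at least one warm server so the first user of the morning does not wait for a cold start.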

Centralized management is another game-changer. Software updates, security patches, and maintenance are handled on the server side, eliminating the need to manage hundreds – or even thousands – of individual devices. For instance, Carrier, a company specializing in building and cold-chain solutions, uses AWS IoT services to power its platform, carrier.io, where it models and integrates digital twins seamlessly [2].

Cloud rendering also reduces upfront hardware costs. Startups and growing businesses can rely on affordable, standard devices while leveraging the cloud’s processing power instead of investing in expensive workstations. On average, mid-sized companies allocate $12 million annually to cloud services, which accounts for roughly 30% of their IT budgets [4].

Additionally, many cloud rendering platforms provide 24/7 customer support, offering expert assistance that may be beyond the capacity of in-house IT teams [3].

Drawbacks of Cloud Rendering

While cloud rendering offers plenty of benefits, it comes with its own set of challenges.

One major issue is network latency. For digital-twin platforms that require real-time interaction, the time it takes for data to travel back and forth can cause noticeable delays, making interfaces feel less responsive.
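A back-of-the-envelope model makes this concrete. The Python sketch below adds the unavoidable propagation delay (light covers roughly 200 km per millisecond in optical fiber) to render, video-encode, decode, and network-overhead times. All stage timings and the 50 ms budget are hypothetical placeholders, not measurements.

```python
# Rough speed of light in optical fiber: about 200 km per millisecond (~2/3 of c).
FIBER_KM_PER_MS = 200.0

def min_round_trip_ms(distance_km: float) -> float:
    """Physical lower bound on round-trip time; real routes add routing and queuing delay."""
    return 2 * distance_km / FIBER_KM_PER_MS

def cloud_frame_latency_ms(distance_km: float, render_ms: float, encode_ms: float,
                           decode_ms: float, network_overhead_ms: float) -> float:
    """Input-to-visible-frame latency for one remotely rendered interaction."""
    return (min_round_trip_ms(distance_km) + network_overhead_ms
            + render_ms + encode_ms + decode_ms)

BUDGET_MS = 50.0  # assumed responsiveness target; derive yours from user testing

for distance_km in (50, 1_000, 5_000):
    total = cloud_frame_latency_ms(distance_km, render_ms=16.7, encode_ms=5.0,
                                   decode_ms=5.0, network_overhead_ms=20.0)
    verdict = "within" if total <= BUDGET_MS else "over"
    print(f"{distance_km:>5} km: {total:5.1f} ms ({verdict} budget)")
```

Under these assumptions a user 50 km from the data center stays within budget while users 1,000 km or more away do not. Distance is only part of the story: the fixed pipeline stages alone add about 47 ms, which is why edge rendering, which removes the encode, decode, and network stages, helps even for nearby users.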

Bandwidth demands are another concern. Streaming high-quality graphics, especially detailed 3D visualizations, requires substantial data transfer. During peak times, this can strain network infrastructure and lead to higher operational costs.
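To size that transfer, multiply stream bitrate by viewing time and user count. The Python sketch below does exactly that; the 20 Mbps bitrate and 8-hour day are hypothetical figures chosen for illustration, not numbers from any specific platform.

```python
def monthly_stream_gb(bitrate_mbps: float, hours_per_day: float, users: int,
                      days: int = 30) -> float:
    """Data streamed to all users in a month, in gigabytes (decimal: 1 GB = 1,000 MB)."""
    megabits = bitrate_mbps * 3_600 * hours_per_day * days * users
    return megabits / 8 / 1_000  # megabits -> megabytes -> gigabytes

# Hypothetical: a 20 Mbps stream of a detailed 3D view, watched 8 hours a day.
for users in (10, 100, 1_000):
    print(f"{users:>5} users: {monthly_stream_gb(20, 8, users):,.0f} GB/month")
```

At 100 users this comes to about 216 TB a month, and the total grows linearly with users, so multiplying it by your provider's per-GB egress price gives a first estimate of the transfer bill.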

Data transfer costs can also climb quickly. Cloud providers typically charge for both compute time and data egress, which can become expensive for platforms with a large number of active users. For example, Fox Renderfarm charges between $0.0306 and $0.051 per core hour for CPU processing and between $0.90 and $1.80 per node per hour for GPU processing [3]. For businesses with continuous rendering needs, these costs can add up faster than investing in hardware.
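To see how per-hour rates compound, the Python calculation below uses the Fox Renderfarm GPU range cited above. The node count and running hours are hypothetical, and the sketch covers compute only; storage and egress fees would come on top.

```python
# GPU rate range quoted above for Fox Renderfarm, in USD per node per hour.
GPU_RATE_LOW, GPU_RATE_HIGH = 0.90, 1.80

def monthly_gpu_cost(nodes: int, hours_per_day: float, rate_per_node_hour: float,
                     days: int = 30) -> float:
    """Compute-only cost of keeping `nodes` GPU nodes busy for a month."""
    return nodes * hours_per_day * days * rate_per_node_hour

# Hypothetical workload: 4 GPU nodes rendering around the clock.
low = monthly_gpu_cost(4, 24, GPU_RATE_LOW)
high = monthly_gpu_cost(4, 24, GPU_RATE_HIGH)
print(f"${low:,.2f} to ${high:,.2f} per month")
print(f"${low * 12:,.2f} to ${high * 12:,.2f} per year")
```

Four nodes running continuously consume 2,880 node-hours a month, or roughly $2,600 to $5,200 at the quoted rates. Comparing the yearly figure with the price of equivalent owned hardware is the quickest way to check whether continuous rendering has crossed the point where buying beats renting.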

Security remains a pressing concern. Even with encryption protecting data in transit, some organizations hesitate to send sensitive industrial information to the cloud, fearing potential risks associated with their proprietary data leaving on-premises systems.

Additionally, cloud rendering might not support certain specialized industrial plugins, limiting its usability for some niche applications.

These challenges explain why many platforms choose to combine cloud rendering with edge processing to strike a balance between performance and cost-efficiency.

Edge Processing: Local Computing Power

Where cloud rendering centralizes computation, edge processing takes the opposite approach and brings computational tasks closer to users. Rather than relying on distant servers, it uses local device GPUs or nearby edge servers to handle rendering and data processing right at the source.

What is Edge Processing?

Edge processing shifts the heavy lifting of computation away from centralized cloud systems to local devices or edge servers. This approach uses onboard GPUs or nearby infrastructure to handle tasks like graphics rendering and data analysis. For digital-twin platforms, this means sensor data can be processed and visualized on-site, leading to faster and more responsive interactions.

According to Gartner, by 2025, over 50% of enterprise-managed data will be created and processed outside traditional data centers or cloud environments [6]. This trend highlights the growing need for faster, more reliable processing that doesn’t rely solely on internet connectivity.

With this foundation in mind, let’s look at how edge processing benefits digital-twin applications.

Benefits of Edge Processing

Edge processing offers several advantages, making it an appealing option for digital-twin technology that demands real-time responsiveness.

One of the standout benefits is ultra-low latency. By eliminating the delays caused by sending data back and forth to the cloud, edge processing ensures quicker responses. This is especially critical in industrial settings where even milliseconds can make a difference. For example, Hyperbat, a vehicle battery manufacturer, uses a 3D digital twin powered by edge computing to enable real-time collaboration between design, engineering, and manufacturing teams. Nvidia’s edge computing solutions integrate 3D CAD models into factory operations, allowing seamless updates and data exchange in real time [5].

Another significant advantage is improved data privacy and security. Sensitive operational data stays within local networks rather than being transmitted to external cloud servers, reducing the risk of interception or breaches. Industries such as energy, water infrastructure, and manufacturing, which handle critical data, particularly benefit from this localized approach [7].

Lower bandwidth costs and increased resilience are additional perks. By running data-intensive applications locally, organizations can reserve wide area network (WAN) capacity for essential traffic. Moreover, edge systems can operate independently of internet connectivity, ensuring critical digital-twin functions remain available even during ISP outages or natural disasters. That said, edge sites do face reliability challenges, averaging 5.39 outages over 24 months, slightly higher than data centers’ 4.81 outages [6].
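Both benefits come from the same pattern: process raw data on-site and send only compact results upstream, buffering them when the link is down. The Python class below is a minimal, hypothetical sketch of that store-and-forward pattern; the class name, summary fields, and queue limit are illustrative assumptions, not part of any edge product.

```python
from collections import deque
from statistics import mean

class EdgeAggregator:
    """Summarize raw sensor samples on-site and queue compact summaries for the cloud.

    Summaries are held while the WAN link is down and sent once it returns; if the
    queue fills during a long outage, the oldest summaries are discarded.
    """

    def __init__(self, window: int, max_pending: int = 1_000):
        if window < 1:
            raise ValueError("window must be at least 1")
        self.window = window
        self.samples = []
        self.pending = deque(maxlen=max_pending)

    def add_sample(self, value: float) -> None:
        self.samples.append(value)
        if len(self.samples) == self.window:
            # One small record replaces `window` raw readings on the WAN link.
            self.pending.append({
                "n": len(self.samples),
                "min": min(self.samples),
                "max": max(self.samples),
                "mean": mean(self.samples),
            })
            self.samples = []

    def flush(self, link_up: bool) -> list:
        """Return queued summaries for upload, or nothing while the link is down."""
        if not link_up:
            return []
        batch = list(self.pending)
        self.pending.clear()
        return batch

# Hypothetical temperature readings: 8 raw samples become 2 summaries.
agg = EdgeAggregator(window=4)
for reading in [20.1, 20.4, 21.0, 20.5, 22.0, 22.3, 21.8, 22.1]:
    agg.add_sample(reading)
print(agg.flush(link_up=False))      # outage: nothing sent, summaries stay queued
print(len(agg.flush(link_up=True)))  # link restored: both summaries sent
```

The local digital twin can keep using every raw sample for real-time views, while the cloud receives only the summaries it needs for long-term analytics.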

Drawbacks of Edge Processing

While edge processing has clear benefits, it also comes with challenges that organizations must address.

Management complexity is a major hurdle. Unlike centralized cloud systems where updates and maintenance occur in one location, edge systems require coordination across multiple sites. Managing dozens or even hundreds of edge locations demands sophisticated tools like configuration management databases (CMDBs) and data center infrastructure management (DCIM) platforms, but the process remains intricate [6].

High upfront costs for edge hardware can also be a barrier. Each edge location needs its own processing equipment, which can be expensive compared to shared cloud resources. However, prefabricated modular data centers (PMDCs) offer a potential solution, reducing deployment costs by up to 30% and speeding up implementation by the same margin compared to traditional setups [6].

Limited local processing power is another concern. Unlike cloud servers, which can scale resources as needed, edge devices have fixed capabilities. This limitation can make it challenging to handle complex digital-twin visualizations, potentially forcing compromises in quality or speed.
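One common way to make that compromise explicit is level-of-detail (LOD) selection: pick the most detailed mesh the device can draw within its frame budget. The Python sketch below models draw time crudely as triangle count divided by device throughput; the mesh sizes, throughput figures, and the 16.7 ms budget (about 60 frames per second) are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class LevelOfDetail:
    name: str
    triangles: int

def pick_lod(levels: list, tris_per_ms: float,
             frame_budget_ms: float = 16.7) -> LevelOfDetail:
    """Pick the most detailed mesh whose estimated draw time fits the frame budget.

    Draw time is modeled crudely as triangles / device throughput; real renderers
    also depend on shaders, textures, and overdraw.
    """
    if not levels:
        raise ValueError("need at least one level of detail")
    fitting = [lod for lod in levels if lod.triangles / tris_per_ms <= frame_budget_ms]
    if not fitting:
        return min(levels, key=lambda lod: lod.triangles)  # coarsest as a last resort
    return max(fitting, key=lambda lod: lod.triangles)

# Hypothetical meshes of one factory line and hypothetical device throughputs.
levels = [LevelOfDetail("full", 5_000_000), LevelOfDetail("medium", 1_000_000),
          LevelOfDetail("low", 200_000)]
print(pick_lod(levels, tris_per_ms=100_000).name)  # rugged tablet at the edge
print(pick_lod(levels, tris_per_ms=500_000).name)  # edge server with a larger GPU
```

The tablet falls back to the medium mesh while the larger edge server can draw the full model, which is exactly the quality-versus-speed trade-off that fixed edge hardware forces.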

Compatibility issues also arise due to the variety of device types and operating systems used in edge deployments. Ensuring consistent performance across different hardware configurations requires careful planning and often necessitates maintaining multiple versions of software.

Finally, security vulnerabilities are an increasing concern. As edge networks grow, they create more entry points for potential attacks. Gartner estimates that 25% of edge networks will experience breaches by 2025, a sharp increase from less than 1% in 2021 [6]. To mitigate these risks, organizations need robust security measures, including encryption and strict access controls at every edge location.

These challenges explain why many organizations opt for hybrid models, combining edge and cloud processing to strike a balance between performance, cost, and operational complexity.

Hybrid Approaches: Using Both Cloud and Edge

Instead of picking between cloud rendering and edge processing, many digital-twin platforms use hybrid approaches that dynamically split workloads based on real-time conditions. These systems decide whether each task should run on local edge devices or in the cloud, balancing performance against cost. By combining the quick response times of edge processing with the scalability of cloud rendering, hybrid systems address many of the drawbacks described above.

The global edge computing market is projected to grow from $36.5 billion in 2023 to $87.3 billion by 2027. Meanwhile, Gartner estimates that global spending on public cloud services will hit $597.3 billion by the end of 2024 [9].

How Hybrid Render Pipelines Work

Hybrid pipelines take the strengths of both cloud and edge processing, optimizing workload distribution in real time. Acting like traffic controllers, they assess factors such as network speed, device capability, task complexity, and system load to decide the best location for processing. For instance, when a digital-twin platform receives a rendering request, the system evaluates local device performance and network conditions. Simple tasks, such as rotating 3D models or visualizing sensor data, might be processed at the edge, while more demanding simulations requiring heavy computational power are sent to cloud GPUs.

Hybrid edge-cloud models handle immediate tasks at the edge while offloading data-heavy or complex processes to the cloud [9].

This approach ensures low latency for time-sensitive tasks and leverages cloud scalability for more intensive computations. The system is adaptive – if network conditions worsen, more tasks are processed locally; if local devices are overwhelmed, the cloud takes over.
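The routing logic described above can be sketched as a small decision function. The Python example below is a hypothetical heuristic, not the API of any orchestration product: it estimates local run time from device capacity and load, keeps the task at the edge if that fits the latency budget, offloads to the cloud only when the network can deliver in time, and otherwise degrades locally. All thresholds and workload figures are assumptions.

```python
from dataclasses import dataclass

@dataclass
class Conditions:
    rtt_ms: float          # measured round-trip time to the cloud region
    bandwidth_mbps: float  # measured throughput to the cloud region
    device_gflops: float   # rough compute capacity of the local device or edge server
    device_load: float     # current utilization, 0.0 (idle) to 1.0 (saturated)

@dataclass
class Task:
    name: str
    gflop: float              # estimated work for one update
    latency_budget_ms: float  # how long the user can wait for the result

def route(task: Task, c: Conditions, max_load: float = 0.8,
          min_bandwidth_mbps: float = 5.0) -> str:
    """Return "edge" or "cloud" for a task (hypothetical heuristic)."""
    free_gflops = c.device_gflops * (1.0 - c.device_load)
    local_ms = task.gflop / free_gflops * 1000 if free_gflops > 0 else float("inf")
    if c.device_load < max_load and local_ms <= task.latency_budget_ms:
        return "edge"  # fast enough locally: avoid the network entirely
    # Offload only if the link is usable and the round trip alone fits the budget
    # (a fuller model would also add cloud queueing and render time).
    if c.bandwidth_mbps >= min_bandwidth_mbps and c.rtt_ms < task.latency_budget_ms:
        return "cloud"
    return "edge"  # poor network: degrade locally rather than wait on the cloud

good_link = Conditions(rtt_ms=40, bandwidth_mbps=50, device_gflops=500, device_load=0.3)
bad_link = Conditions(rtt_ms=300, bandwidth_mbps=2, device_gflops=500, device_load=0.3)
busy_device = Conditions(rtt_ms=40, bandwidth_mbps=50, device_gflops=500, device_load=0.95)

rotate = Task("rotate 3D model", gflop=2, latency_budget_ms=20)
simulate = Task("flow simulation", gflop=20_000, latency_budget_ms=5_000)
overlay = Task("sensor heat-map overlay", gflop=10, latency_budget_ms=200)

print(route(rotate, good_link))     # simple interaction stays at the edge
print(route(simulate, good_link))   # heavy simulation goes to cloud GPUs
print(route(simulate, bad_link))    # network degraded: falls back to local processing
print(route(overlay, busy_device))  # device overwhelmed: cloud takes over
```

The last two calls mirror the adaptive behavior described above: a worsening network pulls work back to the edge, and an overloaded device pushes work to the cloud.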

Real-World Hybrid Examples

The versatility of hybrid architectures is evident across industries. Autonomous vehicles, for example, use edge computing for real-time tasks like obstacle detection and navigation, while the cloud manages software updates, long-term data storage, and advanced analytics. In smart cities, edge computing powers traffic signal controls, environmental monitoring, and public safety, while the cloud supports broader planning and analysis. In manufacturing, edge computing handles real-time machine control and monitoring, with the cloud taking care of data storage and complex analytics [9].

One standout example is the IoTwins project, funded by the European Union from 2019 to 2022. This initiative developed a three-tier hybrid digital-twin model, combining IoT Twins for lightweight local controls, Edge Twins for plant-level management, and Cloud Twins for parallel simulations and deep learning. The project showcased how hybrid systems can meet the rigorous demands of industrial applications [13].

Building such flexible systems requires advanced tools.

Tools That Enable Hybrid Systems

Creating and managing hybrid systems depends on orchestration tools that coordinate workloads across diverse environments. Technologies like Kubernetes and Docker, alongside CMDB and DCIM platforms, ensure consistent application performance across both edge devices and cloud servers.

Seamless workload migration between private and public clouds allows systems to meet high computing, cost, and performance demands [8].

AI and machine learning are increasingly central to hybrid systems, with 67% of successful deployments now incorporating these technologies. AI/ML helps optimize workload distribution and predict system requirements, making operations smoother and more efficient [11].
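To illustrate the "predict system requirements" step, the Python sketch below swaps in an exponentially weighted moving average (EWMA) as a deliberately simple stand-in for an ML forecasting model, then splits the forecast between fixed edge capacity and cloud overflow. The session counts, smoothing factor, and edge capacity are hypothetical.

```python
import math

def ewma_forecast(history: list, alpha: float = 0.3) -> float:
    """Exponentially weighted moving average of past demand.

    Recent observations get weight `alpha`; older ones decay geometrically.
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = history[0]
    for observed in history[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level

def plan_capacity(history: list, edge_capacity: int, alpha: float = 0.3) -> dict:
    """Split forecast concurrent sessions between fixed edge capacity and cloud overflow."""
    forecast = math.ceil(ewma_forecast(history, alpha))
    on_edge = min(forecast, edge_capacity)
    return {"forecast": forecast, "edge": on_edge, "cloud": forecast - on_edge}

# Hypothetical concurrent-session counts for the last five hours.
sessions = [100, 120, 150, 180, 210]
print(plan_capacity(sessions, edge_capacity=150, alpha=0.5))
```

Here the plan reserves cloud capacity for 33 of the 183 forecast sessions. The forecast also trails the latest observation (210), because an EWMA lags rising trends; that weakness is one reason production systems turn to richer models.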

For organizations aiming to adopt hybrid digital-twin platforms, the key is understanding which tasks benefit most from edge processing versus those that thrive in the cloud. This distinction enables systems that deliver responsive performance while keeping costs in check.


Latency vs Cost Analysis

When it comes to hybrid systems, the trade-offs between latency and cost become even more apparent. As we’ve discussed earlier, performance and cost are closely linked, making it essential to strike the right balance when designing scalable digital-twin platforms. Founders face a critical decision: whether to prioritize speed or cost when choosing between cloud rendering and edge processing. Each approach has distinct pricing models, latency implications, and performance characteristics.

Research shows that cloud services often come with a 20%–30% price increase [14] and significant resource inefficiencies, underlining the importance of optimizing costs.

Cloud Rendering: Lower Initial Investment, Higher Latency

Cloud rendering is particularly appealing to startups due to its pay-as-you-go pricing, which helps maintain cash flow without requiring large hardware investments upfront.

That said, the ongoing expenses – such as compute power, storage, and data transfer fees [14] – can add up quickly. For platforms handling substantial 3D data and real-time sensor information, networking fees can become a major financial burden. Latency is another key challenge; data must travel to and from remote data centers, causing delays that can frustrate users in applications requiring real-time responses.

"The wireless link is always the most expensive link in the network." – Sam Fuller, Senior Director of Marketing for AI Inferencing, Flex Logix [16]

While cloud rendering offers scalability, the associated costs – like subscription fees, data transfer charges, security measures, and potential vendor lock-in – can make it a less predictable option financially [15].

Edge Processing: Faster Performance, Higher Upfront Costs

Edge processing, on the other hand, brings data processing closer to the source, significantly reducing latency. This makes it a better fit for interactive digital-twin applications that demand instant responsiveness.

"The way algorithm development is going, and the direction of computer architectures, means that from an architecture point of view more and more compute is likely to get closer to the edge." – Simon Davidmann, CEO, Imperas Software [16]

However, edge computing comes with higher initial costs. Small-scale deployments typically range from $50,000 to $100,000, medium-scale projects fall between $100,000 and $500,000, and enterprise-level solutions can exceed $500,000 [17]. Despite these higher upfront expenses, edge processing offers a more predictable cost structure, as charges are tied to the number of usage instances, workload complexity, and memory requirements [14]. Additionally, it requires specialized expertise and can strain IT resources [1]. A notable example is MadeiraMadeira, which slashed its cloud costs by 90% by transitioning to Azion’s Edge Computing Platform [14].
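A simple break-even calculation turns these ranges into a timeline. The Python sketch below finds the first month in which cumulative edge spending (upfront plus running costs) drops to or below cumulative cloud spending. The upfront figures come from the ranges above; the monthly edge and cloud costs are hypothetical, and the model ignores hardware refresh cycles, financing, and price changes.

```python
import math
from typing import Optional

def break_even_month(edge_upfront: float, edge_monthly: float,
                     cloud_monthly: float) -> Optional[int]:
    """First month in which cumulative edge spend is no higher than cumulative cloud spend.

    Cumulative edge cost after m months:  edge_upfront + m * edge_monthly
    Cumulative cloud cost after m months: m * cloud_monthly
    Returns None when edge never catches up on cost alone.
    """
    monthly_savings = cloud_monthly - edge_monthly
    if monthly_savings <= 0:
        return None
    return math.ceil(edge_upfront / monthly_savings)

# Upfront figures from the ranges above; monthly figures are hypothetical.
for upfront in (50_000, 100_000, 500_000):
    month = break_even_month(upfront, edge_monthly=1_500, cloud_monthly=6_000)
    print(f"${upfront:,} edge deployment breaks even in month {month}")
```

With $4,500 of monthly savings, a $50,000 deployment pays for itself within its first year, while a $500,000 one takes more than nine years, often longer than the hardware's useful life. Cost alone rarely justifies a large edge build; the latency and privacy benefits have to carry part of the case.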

This trade-off between cost and performance highlights the critical decision founders must make when selecting the best approach for their platforms.

Side-by-Side Comparison: Cloud vs. Edge

| Factor | Cloud Rendering | Edge Processing |
|---|---|---|
| Upfront Costs | Minimal (no major hardware investment) | High (from $50,000 to over $500,000 [17]) |
| Ongoing Costs | High (compute, storage, networking fees) | Lower (maintenance and updates) |
| Latency | Higher (remote data center delays) | Lower (local processing minimizes delays) |
| Scalability | Excellent (easy cloud resource scaling) | Limited (restricted by local hardware) |
| Infrastructure Management | Minimal (provider-managed) | Complex (self-managed) |
| Bandwidth Costs | High (constant data transfer fees) | Low (local processing reduces transfer) |
| Technical Expertise Required | Low to Medium | High |
| Predictable Costs | Variable (usage-based) | More predictable |

Cloud rendering is a good choice for scenarios where upfront costs need to stay low and scalability is a priority, even if latency isn’t a critical factor. On the other hand, edge processing is ideal for applications requiring immediate responsiveness, despite its higher hardware costs and complexity. The choice ultimately depends on the specific demands of the platform and its users.

Decision Framework for Founders

Choosing the right rendering approach for your digital-twin platform is a balancing act between performance and cost. With 80% of CEOs identifying cost analysis as their top priority when evaluating new technology [17], having a structured decision-making process is essential. Founders must weigh technical needs against business realities to make informed choices.

Your decision affects performance, user experience, and scalability. One forecast projected that by 2023, 30% of manufacturers would use digital twins and gain a 10% improvement in effectiveness [10], which underscores how much the choice of architecture matters for staying competitive.

Key Factors to Consider

When deciding on a rendering approach, start by addressing these critical factors:

- User location and distribution: If your users are globally dispersed, cloud rendering ensures consistent performance. For a local user base, edge processing can reduce latency.

- Acceptable delay levels: Applications requiring millisecond responses (e.g., production monitoring) benefit from edge processing, while those tolerating longer delays (e.g., architectural visualization) can rely on cloud rendering.

- Budget constraints: Cloud rendering has low upfront costs but can lead to high ongoing expenses for compute, storage, and networking. Edge processing involves higher initial investment but offers more predictable long-term costs [19].

- Data privacy requirements: Industries with stringent compliance needs may prefer edge processing to keep data local, while others can take advantage of cloud scalability.

- Expected growth patterns: Rapid user growth favors cloud rendering for its scalability, while steady, predictable growth aligns better with edge processing, which leverages fixed capacity.

"Digital twins enable ‘what-if’ analysis that guides better decision-making before any physical changes occur." – IBM [18]

By considering these factors, you can quantitatively evaluate your options and make a more informed choice.

Step-by-Step Decision Matrix

To simplify your decision, use a scoring system based on the factors discussed earlier. Assign a score from 1 to 5 for each factor, multiply by its weight, and total the results.

- Performance requirements: For latency-critical applications, edge processing scores higher (4–5) due to its responsiveness, while cloud rendering scores lower (2–3).

- Financial considerations: Cloud rendering scores higher (4–5) for minimal upfront costs, whereas edge processing scores lower (3–4), with its predictable long-term expenses partly offsetting the higher initial investment.

- Technical complexity: Cloud rendering scores higher (4–5) for ease of implementation, while edge processing requires specialized expertise, scoring lower (2–3).

- Scalability: Cloud rendering excels (5) with virtually unlimited scaling, while edge processing scores lower (2–3) due to hardware limitations. Hybrid approaches can balance these needs (3–4).

After scoring and weighting each factor, sum the totals to identify the approach that best aligns with your platform’s needs and constraints.
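The matrix is easy to keep in a short script so you can rerun it as priorities shift. In the Python sketch below, the per-factor scores are picked from the ranges above and held fixed to isolate the effect of the weights; both weight profiles are hypothetical examples, not recommendations.

```python
FACTORS = ("performance", "financial", "complexity", "scalability")

# Scores (1-5) chosen from the ranges above for a latency-critical platform.
SCORES = {
    "cloud": {"performance": 2, "financial": 5, "complexity": 4, "scalability": 5},
    "edge":  {"performance": 5, "financial": 3, "complexity": 2, "scalability": 3},
}

def weighted_totals(scores: dict, weights: dict) -> dict:
    """Multiply each factor score by its weight and sum per option."""
    missing = [f for f in FACTORS if f not in weights]
    if missing:
        raise ValueError(f"missing weights for: {missing}")
    return {option: sum(s[f] * weights[f] for f in FACTORS)
            for option, s in scores.items()}

# Hypothetical weight profiles for two kinds of platform.
latency_critical = {"performance": 4, "financial": 2, "complexity": 1, "scalability": 2}
cost_sensitive = {"performance": 1, "financial": 4, "complexity": 2, "scalability": 3}

for label, weights in (("latency-critical", latency_critical),
                       ("cost-sensitive", cost_sensitive)):
    totals = weighted_totals(SCORES, weights)
    best = max(totals, key=totals.get)
    print(f"{label}: {totals} -> {best}")
```

The same scores produce different winners (edge 34 vs cloud 32 under the latency-critical weights, cloud 45 vs edge 30 under the cost-sensitive ones), so agreeing on the weights with your team is usually the most important part of the exercise. A hybrid option can be added as a third row once you have scored it.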

How AlterSquare Can Help

Using this framework, our 90-day MVP development cycle allows you to prototype multiple rendering strategies. This approach reduces risk and provides real-world data to guide your final decision.

Our expertise in AI-driven development is particularly valuable for digital-twin platforms, where real-time analytics and intelligent data processing are crucial. We can help design hybrid architectures that combine the strengths of cloud and edge processing, ensuring your platform delivers high performance while managing costs effectively.

If you’re working with an existing system or legacy infrastructure, our application modernization services can evaluate your current setup and recommend the most suitable rendering approach tailored to your technology stack.

With the digital twin market projected to reach $48.2 billion by 2026, growing at a CAGR of 20% [10], making well-informed architectural decisions now will set your platform up for success in this expanding market.

Conclusion

When it comes to choosing between cloud rendering and edge processing, the decision ultimately hinges on balancing performance, cost, and scalability. With one-third of manufacturers reporting that their data collection volumes have at least doubled in the past two years – and nearly 20% seeing a threefold increase [1] – the importance of selecting the right rendering method has never been more apparent.

"The ‘best’ choice hinges on the specific needs and priorities of the manufacturer. This involves careful consideration of factors such as cost, security, latency, and the reliability of Internet connections."

- Manish Jain, Product Leader for Edge Analytics and AI Applications, and Achim Thomsen, Director of Common Connected Applications, Rockwell Automation [1]

The rapid growth of the digital twin market – up 71% between 2020 and 2022 [21] – further underscores the need for informed decisions about system architecture.

Key Takeaways

- Cloud rendering is a cost-effective option with low upfront expenses, but it comes with higher latency, making it less ideal for real-time applications.

- Edge processing, on the other hand, offers faster response times for latency-critical operations, though it requires a larger initial investment and more complex infrastructure management.

- Hybrid strategies combine the best of both worlds, balancing the scalability of cloud solutions with the responsiveness of edge computing. According to IDC, 40% of data will be stored, managed, and analyzed at the edge [20], highlighting the growing relevance of distributed systems.

Organizations that effectively integrate digital twins have reported a 15% improvement in key sales and operational metrics and over a 25% boost in system performance [21]. These benefits are directly tied to choosing a rendering architecture that aligns with your platform’s unique needs.

Next Steps for Founders

Start by clearly defining your platform’s latency requirements and budget. Edge processing is ideal for applications that demand millisecond-level responsiveness, while cloud rendering is better suited for scenarios where higher latency is acceptable. These considerations align closely with earlier discussions about the trade-offs between cost, latency, and scalability.

Additionally, evaluate your data sensitivity and long-term growth projections. With the global digital twin market expected to reach $259.32 billion by 2032 [12], making the right architectural decisions now can position your platform for future success.

For many, hybrid approaches may strike the best balance, offering real-time responsiveness alongside scalable processing power. Services such as AlterSquare’s 90-day MVP development cycle provide an opportunity to prototype and test these approaches with actual user data, reducing the risks tied to these pivotal decisions.

Ultimately, your choice will shape not only your platform’s current performance but also its ability to scale effectively as your user base and technology needs evolve.

FAQs

How does combining cloud rendering and edge processing improve performance for digital-twin platforms?

A hybrid approach that blends cloud rendering with edge processing boosts the performance of digital-twin platforms by striking the right balance between scalability and responsiveness. Here’s how it works: cloud rendering takes care of heavy-duty tasks like creating detailed visualizations and running large-scale simulations using powerful remote GPUs. Edge processing, on the other hand, handles real-time, low-latency operations on the user’s device or a nearby edge server, keeping interactions smooth and immediate.

This setup improves latency, keeps costs manageable, and enhances the overall user experience. By shifting resource-heavy computations to the cloud while handling time-sensitive tasks locally, businesses can enjoy quicker response times and lower operational expenses. It’s a smart solution for managing the demands of dynamic and complex digital-twin environments.

What should founders consider when choosing between cloud rendering and edge processing for their digital-twin platform?

When choosing between cloud rendering and edge processing, it’s essential to weigh factors like latency, cost, and data privacy to determine the best fit for your platform.

Edge processing works best for tasks that demand low latency or involve sensitive data. Since data is processed locally, it’s perfect for real-time applications like monitoring systems or control mechanisms. On the other hand, cloud rendering excels at handling large-scale, complex computations or long-term data analysis. However, it often requires a stable internet connection and may introduce higher latency.

For many digital-twin platforms, a hybrid model – blending edge and cloud processing – offers a practical solution. This approach ensures responsiveness by using edge processing while leveraging the scalability and power of cloud resources. Ultimately, the decision should align with your platform’s specific requirements and the expectations of your users.

What security risks should I consider with cloud rendering, and how can I reduce them?

Cloud rendering comes with its share of security challenges, such as data breaches, misconfigured settings, weak authentication, account hijacking, and denial-of-service attacks. These risks often stem from flaws in the cloud infrastructure, lax access controls, or even insider threats.

To reduce these vulnerabilities, take steps like implementing multi-factor authentication for stronger access control, encrypting sensitive data both during transfer and while stored, and performing regular security audits. It’s also essential to monitor cloud configurations in real time and have a solid incident response plan ready to tackle any issues quickly. Staying proactive with these measures can help create a safer environment for cloud rendering operations.
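Configuration monitoring can start as simply as an automated check over an inventory of resources. The Python sketch below flags public storage, missing encryption at rest, unencrypted transport, and users without MFA. The inventory format and field names are hypothetical; in practice you would export this data from your provider's configuration or asset-inventory service and run the check on a schedule.

```python
def audit(resources: list) -> list:
    """Return human-readable findings for risky settings in a hypothetical inventory."""
    findings = []
    for res in resources:
        name, kind = res.get("name", "<unnamed>"), res.get("kind")
        if kind == "bucket":
            if res.get("public_access", False):
                findings.append(f"{name}: publicly readable")
            if not res.get("encrypted_at_rest", False):
                findings.append(f"{name}: not encrypted at rest")
            if not res.get("tls_only", False):
                findings.append(f"{name}: accepts unencrypted connections")
        elif kind == "user":
            if not res.get("mfa_enabled", False):
                findings.append(f"{name}: MFA not enabled")
    return findings

# Hypothetical inventory for a cloud-rendered digital twin.
inventory = [
    {"kind": "bucket", "name": "twin-scenes", "public_access": False,
     "encrypted_at_rest": True, "tls_only": True},
    {"kind": "bucket", "name": "sensor-archive", "public_access": True,
     "encrypted_at_rest": False, "tls_only": True},
    {"kind": "user", "name": "render-admin", "mfa_enabled": False},
]
for finding in audit(inventory):
    print(finding)
```

Treating a missing field as the unsafe value (for example, assuming no encryption unless it is explicitly recorded) means gaps in the inventory surface as findings instead of passing silently.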
